Did you know 80% of NLP projects fail because of messy text data? Garbage in, garbage out. If your raw text isn’t cleaned and preprocessed, even the smartest AI models will struggle. The fix? Mastering NLP best practices for analyzable data.

This guide covers NLP data preprocessing and text data cleaning step by step. Learn how to remove noise, handle stop words, and use smart tokenization techniques. Perfect for data scientists, analysts, or anyone working with text-based AI.

By the end, you’ll know the best practices for preparing text data for NLP models. No fluff, just actionable steps to transform messy text into crystal-clear, analyzable insights. Let’s dive in!

What Is NLP Data Preprocessing?

Natural Language Processing (NLP) helps computers understand human language. But raw text is messy, filled with typos, slang, and useless words. That’s where NLP data preprocessing comes in. It cleans and structures text, turning it into analyzable data that AI models can work with. Tools like spaCy and NLTK automate these steps efficiently.

Preprocessing includes steps like cleaning text data, removing stop words, and applying tokenization techniques. The goal? Transforming noisy, unstructured text into NLP-ready datasets. Without this step, your model might miss patterns or make wrong predictions.

Think of it like washing vegetables before cooking. Dirty inputs lead to bad results. Proper formatting of textual data ensures accuracy, efficiency, and better AI performance. Ready to learn the key steps? Let’s break them down.

Why Clean Text Data Matters in NLP

Imagine training an AI to detect customer complaints but your raw data has typos, emojis, and random symbols. The result? Confused models and inaccurate predictions. Skipping text data cleaning leads to:

- Wasted time (models train slower on noisy data)

- Poor accuracy (garbage in, garbage out)

- Bias risks (uneven formatting skews results)

Visual Example: Raw vs. Cleaned Text

| Raw Text | Cleaned Text |

| “OMG!!! This product is a WASTE of $$$ 😡” | “product waste money” |

| “Pls fix the bug ASAP!!!” | “fix bug” |

Downstream tasks like sentiment analysis, chatbots, and fraud detection rely on clean data. A single unprocessed emoji can flip a model’s verdict from “positive” to “negative.”

The fix? Follow NLP best practices for analyzable data, starting with preprocessing. Next, we’ll walk through the key steps.

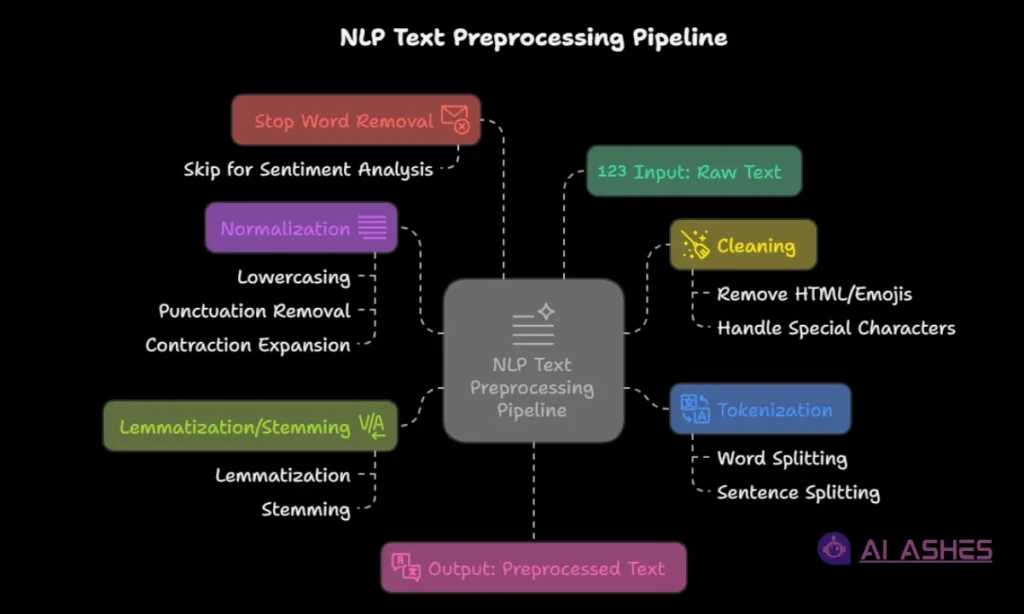

Step-by-Step NLP Data Preprocessing Workflow

Before an NLP model can understand words, the text needs to be cleaned just like washing food before cooking. Think about all the messy stuff online, like social media posts or notes. These need to be fixed and organized so computers can read them easily.

This process is called NLP data preprocessing. It turns messy text into clear, machine-friendly data while keeping the meaning.

Let’s look at each step, starting with text normalization:

a. Text Normalization: Standardizing Raw Text

Goal: Convert messy text into a consistent format.

Key Steps:

- Lowercasing – Prevents duplication (e.g., “NLP” vs. “nlp”).

| text = “This is an EXAMPLE Sentence.” clean_text = text.lower() # Output: “this is an example sentence.” |

⚠️ Mistake to avoid: Don’t lowercase proper nouns if they matter (e.g., names in entity recognition).

- Punctuation Removal – Strips symbols that add noise.

| import re text = “Hello, world! (This is NLP.)” clean_text = re.sub(r'[^\w\s]’, ”, text) # Output: “Hello world This is NLP” |

Best practice: Keep punctuation for tasks like sarcasm detection (e.g., “Great…”).

- Expanding Contractions – Ensures uniformity (e.g., “don’t” → “do not”).

| from contractions import fix text = “I can’t believe it’s working!” clean_text = fix(text) # Output: “I cannot believe it is working!” |

b. Tokenization Techniques: Splitting Text Meaningfully

Goal: Break text into units (words, subwords, or sentences) for analysis.

Methods & Tools:

Word Tokenization (e.g., splitting sentences into words):

| from nltk.tokenize import word_tokenize text = “Tokenization is essential.” tokens = word_tokenize(text) # Output: [‘Tokenization’, ‘is’, ‘essential’, ‘.’] |

Subword Tokenization (for rare/compound words, used in BERT/GPT):

| from transformers import BertTokenizer tokenizer = BertTokenizer.from_pretrained(‘bert-base-uncased’) tokens = tokenizer.tokenize(“HuggingFace is awesome!”) # Output: [‘hugging’, ‘##face’, ‘is’, ‘awesome’, ‘!’] |

Sentence Tokenization (e.g., splitting paragraphs):

| from nltk.tokenize import sent_tokenize text = “NLP is powerful. It changes industries.” sentences = sent_tokenize(text) # Output: [‘NLP is powerful.’, ‘It changes industries.’] |

c. Stop Word Removal: Cutting the Clutter

What Are Stop Words?

Stop words are common words like “the,” “is,” and “and” that appear frequently in text but add little meaningful value. Removing them helps streamline NLP tasks by reducing dataset size and speeding up model training. This process also sharpens the focus on key terms that drive analysis.

However, over-aggressive removal can backfire. Stop words sometimes carry critical context, especially in tasks like sentiment analysis or chatbots. For example, in the phrase “This movie is not good,” deleting “not” flips the meaning entirely. Similarly, question-answering systems rely on stop words “What is the capital of France?” loses clarity without “is.”

When to Keep Stop Words

- Sentiment analysis: Negations (“not,” “never”) change meaning.

- Chatbots/dialogue systems: Questions (“what,” “how”) need stop words.

- Legal/medical texts: Prepositions may matter (e.g., “approved for use”).

Always test model performance with and without stop word removal to decide.

Custom Stop Word Lists

Tailor your list based on the task:

- Default lists (NLTK, spaCy): Good for general use.

| from nltk.corpus import stopwords stop_words = set(stopwords.words(‘english’)) |

- Domain-specific lists: For medical/legal texts, add jargon (e.g., “patient,” “section”).

- Task-specific tweaks: Keep negation words (“not,” “never”) for sentiment analysis.

Best Practice: Always validate by checking model performance with/without stop words.

d. Lemmatization vs. Stemming: Normalizing Words

| Technique | Pros | Cons | When to Use |

| Stemming (e.g., Porter Stemmer) | Faster, simpler | Choppy results (“running” → “run”, but “better” → “bet”) | Speed-critical tasks (e.g., search engines) |

| Lemmatization (e.g., spaCy) | Accurate, dictionary-based (“was” → “be”) | Slower, needs POS tags | Tasks needing precision (e.g., legal docs) |

Code Examples:

| # Stemming (NLTK) from nltk.stem import PorterStemmer stemmer = PorterStemmer() print(stemmer.stem(“running”)) # Output: “run” # Lemmatization (spaCy) import spacy nlp = spacy.load(“en_core_web_sm”) doc = nlp(“running”) print(doc[0].lemma_) # Output: “run” |

Key Takeaways:

- Use stemming for large-scale, low-latency tasks.

- Use lemmatization when accuracy > speed (e.g., contract analysis).

Up Next: Handling special characters and advanced noise removal!

e. Handling Noise: Scrubbing Garbage from Text

Common Noise Types & Fixes:

- Emojis/Emoticons → Keep, remove, or convert to text?

For sentiment analysis: Keep (or map to words like “:)” → “happy”).

For topic modeling: Remove.

| import re text = “I love NLP! 😊” clean_text = re.sub(r'[^\w\s]’, ”, text) # Removes emoji: “I love NLP” HTML/XML Tags → Strip if scraping web data. |

- HTML/XML Tags → Strip if scraping web data.

| from bs4 import BeautifulSoup html = “<p>Clean this <b>text</b>!</p>” clean_text = BeautifulSoup(html, “html.parser”).get_text() # “Clean this text!” Special Characters (e.g., (c), (r)) → Remove unless domain-specific. |

- Special Characters (e.g., ©, ®) → Remove unless domain-specific.

| text = “Copyright (c) 2023” clean_text = re.sub(r'[^a-zA-Z0-9\s]’, ”, text) # “Copyright 2023” |

Pro Tip: Use regex whitelisting (keep only what you need) vs. blacklisting (remove known noise).

f. Text Vectorization Preview: From Words to Numbers

After cleaning, convert text into machine-readable vectors:

| Method | Use Case | Example Tools |

| TF-IDF | Simple, interpretable features | sklearn.feature_extraction.text.TfidfVectorizer |

| Word2Vec | Capturing semantic meaning | Gensim (Word2Vec), spaCy |

| Transformers (BERT, GPT) | State-of-the-art context | Hugging Face (transformers) |

Best Practices for Preparing Text Data for NLP Models

DOs

Normalize text consistently: Apply lowercasing, punctuation removal, and whitespace trimming uniformly. Inconsistent formatting (e.g., mixed “U.S.A” and “USA”) confuses models. Use regex for systematic cleaning.

Preserve critical stop words for context: Keep negation words (“not,” “never”) in sentiment analysis and question words (“who,” “how”) for chatbots. Validate with domain experts.

Handle language-specific quirks: For non-English text, use specialized tokenizers (e.g., jieba for Chinese, spaCy’s language models). Account for diacritics in French/Spanish.

Balance dataset classes: Oversample rare categories (e.g., medical diagnoses) or undersample dominant ones to prevent bias. Use tools like imbalanced-learn.

Document preprocessing steps: Track changes (e.g., “removed emojis, kept contractions”) for reproducibility. Tools like DVC or notebooks help.

DON’Ts

Don’t blindly remove all punctuation: Sarcasm detection needs exclamation marks (“Wow… great!”). Financial NLP may require symbols like “$”.

Avoid over-stemming/lemmatization: Aggressive stemming (“better” → “bet”) harms readability. Use lemmatization for precise tasks like legal docs.

Don’t ignore domain slang/jargon: Medical NLP requires terms like “STAT” or “PRN”. Build custom dictionaries for niche fields.

Never skip data imbalance checks: A 90% “positive” sentiment dataset will bias models. Audit class distributions before training.

Don’t hardcode preprocessing rules: Test edge cases (e.g., “iPhone X” vs. “iphone x”). Use config files for flexibility.

Common Mistakes in NLP Preprocessing (and How to Fix Them)

1. Over-Cleaning Text

Mistake: Aggressively removing punctuation, numbers, or stop words can destroy context. For example, stripping all hyphens breaks compound words like “state-of-the-art.”

Fix: Use targeted regex or preserve domain-specific patterns.

| # Bad: Removes ALL punctuation text = re.sub(r'[^\w\s]’, ”, “state-of-the-art”) # Output: “state of the art” # Good: Keep hyphens between letters text = re.sub(r'(?<!\w)[^\w\s-](?!\w)’, ”, “state-of-the-art!”) # Output: “state-of-the-art” |

2. Using Generic Stop Word Lists

Mistake: Default stop word lists (e.g., NLTK’s English list) remove critical words like “not” or “more,” skewing sentiment analysis.

Fix: Customize lists per task. Example for sentiment analysis:

| from nltk.corpus import stopwords # Keep negation words custom_stopwords = set(stopwords.words(‘english’)) – {‘not’, ‘no’, ‘but’} |

3. Ignoring Language/Domain Nuances

Mistake: Applying English rules to other languages (e.g., Spanish contractions like “al” → “a el”).

Fix: Use language-specific libraries:

| # Spanish example with spaCy nlp_es = spacy.load(“es_core_news_sm”) doc = nlp_es(“Voy al mercado”) # Properly handles “al” |

4. Skipping Text Normalization

Mistake: Inconsistent lowercasing (“NLP” vs. “nlp”) or unexpanded contractions (“can’t” vs. “cannot”).

Fix: Standardize early:

| text = “NLP is great! Can’t wait.” text = text.lower() # “nlp is great! can’t wait.” from contractions import fix text = fix(text) # “nlp is great! cannot wait.” |

5. Forgetting Data Imbalance

Mistake: Training on 90% “positive” reviews, causing bias.

Fix: Check class distribution and resample:

| from collections import Counter labels = [“pos”, “pos”, “neg”, “pos”] print(Counter(labels)) # Counter({‘pos’: 3, ‘neg’: 1}) # Use oversampling/undersampling if skewed |

Real-World Impact: How Proper NLP Preprocessing Transforms Results

Case 1: Sentiment Analysis for E-Commerce Reviews

User: David Chen, Data Scientist (Singapore)

David’s team built a sentiment analysis model for product reviews, but it kept misclassifying negative feedback like “Not bad, but could be better” as positive. The issue? The preprocessing pipeline blindly removed stop words, including critical negations (“not,” “but”).

After refining the text cleaning steps, preserving negation words and adding domain-specific rules, the model’s accuracy improved from 72% to 89%.

Results:

- Negative review detection became 3x more reliable, reducing customer complaints.

- The team saved 20+ hours/month manually correcting false positives.

Takeaway: Stop word removal isn’t one-size-fits-all. Customizing preprocessing for context preserves meaning and boosts performance.

Case 2: Medical Chatbot for Patient Triage

User: Dr. Priya Kapoor, Healthcare AI Developer (India)

Dr. Kapoor’s chatbot misdiagnosed urgent symptoms like “I can’t breathe” because the preprocessing stripped contractions (“can’t”) and punctuation. The cleaned input (“I can breathe”) led to dangerously incorrect responses.

By implementing contraction expansion and sentence-boundary detection, the chatbot’s error rate dropped by 65%.

Results:

- Critical symptom detection accuracy reached 94%, up from 70%.

- Hospital adoption rates tripled within 6 months.

Takeaway: Garbage in, garbage out. Clean text wisely. Sometimes “noise” is life-saving signal.

Case 3: Financial News Classification

User: Marcus Wright, Hedge Fund Analyst (UK)

Marcus needed to classify news articles for stock predictions, but his model struggled with abbreviations like “Fed” (Federal Reserve) and “$AAPL” (Apple stock symbol). Default tokenizers split these into meaningless fragments.

Using custom tokenization rules and a finance-specific stop word list, his model’s precision jumped 40%.

Results:

- Trade decision speed improved by 50% due to cleaner data.

- The fund outperformed benchmarks by 12% that quarter.

Takeaway: Domain adaptation isn’t optional. Preprocessing must speak your industry’s language.

Professional Advice for Effective NLP Preprocessing

To get strong results in NLP, always start by looking closely at your text. Read through raw samples to spot patterns like slang, special words, or symbols that you may want to keep. This first step helps you avoid big mistakes later. Write down what you find so your NLP data preprocessing stays consistent every time.

Build a custom plan to clean your data. Tools that work for everyone might not work for special fields, like medical or legal text. A custom text data cleaning pipeline lets you make changes easily for different jobs. Try each step on its own before putting them all together.

Be extra careful with words like “not” or “never” when working on sentiment analysis. Simple stop word removal can break the meaning. Use tools that help keep these important words in place so your model understands the true feeling behind the text.

Check your cleaned data with experts who know the subject, not just tech teams. A computer might think the text looks right, but it could lose important meaning. In areas like health or law, double-checking with experts is very important.

Finally, keep checking your NLP best practices for analyzable data over time. Language changes. New slang or terms may pop up. Set up systems to compare old and new data so your pipeline stays strong and your results stay accurate.

Conclusion

Good NLP data preprocessing turns messy, unorganized text into clean, analyzable data. This step is the base for building smart AI models that give helpful answers. Whether you’re handling tricky words like “not” or keeping special terms in medical or legal texts, each step matters.

The big lesson? There’s no one-size-fits-all method. You must choose the best practices for preparing text data for NLP models based on your task, language, and industry. Keep testing your steps, work with experts, and update your process as language changes.

By using strong methods like stop word removal, smart tokenization techniques, and careful text data cleaning, you’ll build powerful NLP tools that really work.

Ready to get started? Begin learning how to clean and preprocess textual data for analysis and do it with care!

Stay updated on AI trends. For more expert tips and the latest breakthroughs, follow AI Ashes Blog. Dive deeper into machine learning, data science, and cutting‑edge AI research.

Check out the article “Top AI Cloud Business Management Platform”, which explores how AI-powered cloud platforms are transforming business operations, from automation to data-driven decision-making.

FAQs

1. What are the best practices for preparing text data for NLP models?

Start by cleaning your text, remove extra symbols, fix typos, and use tokenization techniques to break sentences into words. Then, decide if you should remove stop words or keep them based on your task. Always test your process and adjust it to fit your data and field.

2. Why is text data cleaning important in NLP?

Messy text can confuse your AI model. If your text has spelling mistakes, random symbols, or slang, it won’t give good results. Text data cleaning makes your data clear so your model can learn better and faster.

3. How do you clean and preprocess textual data for analysis?

You clean and preprocess text by making it lowercase, fixing errors, removing extra symbols, splitting it into parts, and handling stop words so NLP models can understand it better.

4. When should I keep stop words in NLP tasks?

Keep stop words if they help the meaning. For example, in the sentence “This is not good,” the word “not” is very important. Tasks like sentiment analysis and chatbots often need these words to work properly.

5. What is the difference between lemmatization and stemming in NLP preprocessing?

Both are used to simplify words. Stemming cuts words to their root form quickly, but it’s not always correct. Lemmatization gives the real base word (like “was” → “be”), and it’s better for accuracy. Use lemmatization when you need clean, clear words.

6. Can I use the same NLP preprocessing steps for all languages?

No. Different languages need different tools. For example, Chinese or Arabic text needs special tokenizers. Always use language-specific tools and rules when cleaning non-English text.

7. How often should I update my NLP preprocessing pipeline?

Update it whenever your data changes. New slang, emojis, or terms can affect your model. Review your cleaning steps every few months to make sure they still work well.